Compute power – The way we use our workhorse toolsets is inextricably linked to the hardware we use to run them. Al Dean considers how advances in compute power are impacting the ways we work – and why the trend is likely to continue

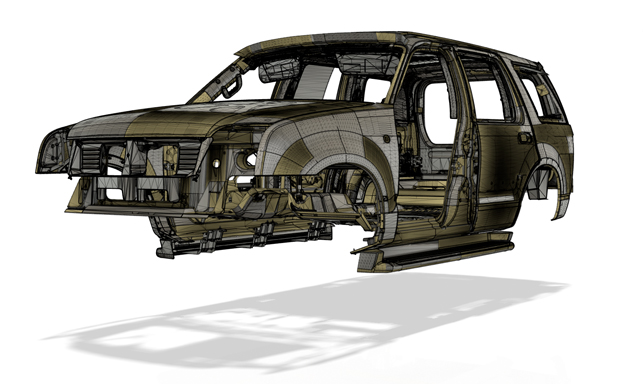

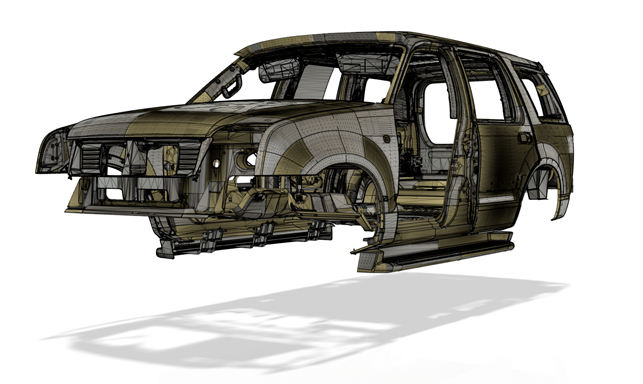

The sort of complex dataset that can make cloud-based systems weep into their server racks

During a recent chat with a design team at a global multinational, the conversation turned to how that team’s members handle the compute-heavy process of rendering, both during design review cycles and for final packaging. Because they rely on a CPU-based system, they had previously set up a network rendering farm, where unused compute resources in the office were used outside of business hours to do the heavy lifting. It’s a scenario with which many of you are familiar, I’m sure.

From there, this team started requesting more portable machines, as business improved, the supply chain became more globally dispersed and, crucially, portable machines became sufficiently powerful to run all of their systems.

But during overseas trips, particularly to the Far East, problems emerged with using a VPN link back to the office network. Laptops would suddenly spring into life, without warning, as they were commandeered to help out on a rendering compute – even in the wee small hours, when the laptop was located in a hotel room some 5,600 miles away from the office.

The upshot is that this team has had to reevaluate how computation is accomplished, in a way that takes advantage of the hardware it currently uses, in order to accommodate this ‘new normal’ situation.

Another example I came across recently involved experimenting with importing heavy native datasets into a solely cloud-based design system. While the specifications said that the system should be able to handle the type of data involved (a mix of structured b-rep geometry and mesh-based data as found in a JT-like file), error messages were the closest I ever got to a completed import.

So what was going wrong? The answer is that the combination of peculiar geometry formats (surface and mesh), along with this being a pretty daft set of geometry (but by no means atypical), meant I was constantly hitting a memory limit.

Now, here’s the rub: in the old days, if you ran out of memory, you could buy an upgrade or, indeed, a new machine. If the project was critical, that was often a perfectly reasonable approach. But this is a new era of no installs, no expensive hardware and infinite compute power. And it’s the final item here that’s the issue.

For a while, there’s been a misconception about cloud-based systems offering ‘infinite computing’. As with all good tropes, there’s certainly an element of truth here, but with some important caveats. Yes, a cloud-based approach gives you access to a pretty unlimited set of processors – but those processors are trapped in pretty ordinary computing devices, stored in racks, in their thousands, in an ‘insert-name-of-cloud-provider-here’ data facility somewhere.

As a customer, you have no control over the specification of that hardware and, if your processing problem gets too complex, then you’re stuck. And in terms of productivity, that is just not a satisfactory place to be.
